Unlocking the Power of Data Integration: Best Practices and Tools

Data integration is a critical process for businesses looking to leverage their data assets to make informed decisions, improve operations, and gain a competitive edge. In this post, we’ll explore best practices for data integration and highlight some of the most effective tools available today.

Understanding Data Integration

Data integration involves combining data from different sources into a single, unified view. This process is essential for organizations that want to consolidate their data to improve analytics, enhance decision-making, and streamline operations. Effective data integration ensures that data is accurate, consistent, and accessible across the organization.
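To make the idea of a "single, unified view" concrete, here is a minimal sketch in Python. The two sources (a CRM export and a billing export), the field names, and the `unify_customers` helper are all hypothetical and chosen only for illustration.

```python
from typing import Dict, List


def unify_customers(crm_rows: List[dict], billing_rows: List[dict]) -> Dict[str, dict]:
    """Merge rows from two hypothetical sources into one record per customer_id."""
    unified: Dict[str, dict] = {}

    # Start with the CRM data, which carries the descriptive fields.
    for row in crm_rows:
        unified[row["customer_id"]] = {
            "name": row["name"],
            "email": row["email"],
            "balance": None,
        }

    # Add billing data; customers missing from the CRM still get a record.
    for row in billing_rows:
        record = unified.setdefault(
            row["customer_id"], {"name": None, "email": None, "balance": None}
        )
        record["balance"] = row["balance"]

    return unified


crm = [{"customer_id": "C1", "name": "Ada", "email": "ada@example.com"}]
billing = [
    {"customer_id": "C1", "balance": 120.0},
    {"customer_id": "C2", "balance": 35.5},
]
view = unify_customers(crm, billing)
```

Customer `C2` appears only in the billing source, so the unified record keeps its balance and leaves the CRM fields empty. Gaps like this are often the first thing an integration project surfaces.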

Key Benefits of Data Integration:

- Improved Data Quality: Ensures that data is consistent and accurate across different systems.

- Enhanced Decision-Making: Provides a comprehensive view of data, enabling better insights and decisions.

- Operational Efficiency: Streamlines processes by reducing the need for manual data handling.

Best Practices for Data Integration

1. Define Clear Objectives

Before embarking on a data integration project, it’s important to define clear objectives. Understand what you aim to achieve with data integration, whether it’s improving data quality, enabling real-time analytics, or enhancing operational efficiency.

2. Choose the Right Integration Method

There are several methods for data integration, including ETL (Extract, Transform, Load), ELT (Extract, Load, Transform), data replication, and data virtualization. Choose the method that best suits your organization’s needs and data architecture.
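The difference between ETL and ELT is mostly about where the transformation runs. The sketch below uses Python's built-in `sqlite3` module as a stand-in for a target warehouse. The table names and sample rows are assumptions made for the example.

```python
import sqlite3

# Hypothetical raw extract: amounts arrive as untrimmed strings.
RAW_ORDERS = [("A-1", " 19.5 "), ("A-2", "5.0"), ("A-3", " 42.25")]


def run_etl(conn: sqlite3.Connection) -> None:
    """ETL: transform in application code, then load the cleaned rows."""
    cleaned = [(order_id, float(amount.strip())) for order_id, amount in RAW_ORDERS]
    conn.execute("CREATE TABLE orders_etl (order_id TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?)", cleaned)


def run_elt(conn: sqlite3.Connection) -> None:
    """ELT: load the raw rows first, then transform inside the target with SQL."""
    conn.execute("CREATE TABLE orders_raw (order_id TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?)", RAW_ORDERS)
    conn.execute(
        "CREATE TABLE orders_elt AS "
        "SELECT order_id, CAST(TRIM(amount) AS REAL) AS amount FROM orders_raw"
    )


conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)

etl_rows = conn.execute(
    "SELECT order_id, amount FROM orders_etl ORDER BY order_id"
).fetchall()
elt_rows = conn.execute(
    "SELECT order_id, amount FROM orders_elt ORDER BY order_id"
).fetchall()
```

Both paths end with the same cleaned table. ELT also keeps the raw copy (`orders_raw`) in the target, which can be useful when transformation rules change later.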

3. Ensure Data Quality

Data quality is paramount in data integration. Implement data cleansing processes to remove duplicates, correct errors, and standardize data formats. This ensures that integrated data is accurate and reliable.
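As a rough illustration of the cleansing steps mentioned above (removing duplicates and standardizing formats), here is a small Python sketch. The record layout, the accepted date formats, and the choice of email as the deduplication key are assumptions made for the example, not a rule that fits every dataset.

```python
from datetime import datetime
from typing import List, Optional

# Assumed input date formats; extend this to match your real sources.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y")


def standardize_date(value: str) -> Optional[str]:
    """Return the date as ISO 8601 (YYYY-MM-DD), or None if no format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def cleanse(records: List[dict]) -> List[dict]:
    """Normalize fields and drop duplicates, keeping the first record per email."""
    seen = set()
    cleaned = []
    for rec in records:
        email = rec["email"].strip().lower()
        if email in seen:
            continue  # duplicate after normalization
        seen.add(email)
        cleaned.append(
            {
                "email": email,
                "name": " ".join(rec["name"].split()).title(),
                "signup_date": standardize_date(rec["signup_date"]),
            }
        )
    return cleaned


raw = [
    {"email": " Ada@Example.com ", "name": "ada  lovelace", "signup_date": "2024-01-15"},
    {"email": "ada@example.com", "name": "Ada Lovelace", "signup_date": "15/01/2024"},
    {"email": "grace@example.com", "name": "GRACE HOPPER", "signup_date": "03-09-2024"},
]
clean = cleanse(raw)
```

Note that the duplicate only becomes visible after the email is trimmed and lowercased, which is why normalization usually needs to run before deduplication.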

4. Use Scalable Tools

Select data integration tools that can scale with your organization’s growth. As data volumes increase, the chosen tools should be able to handle the additional load without compromising performance.
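One pattern that helps integration jobs cope with growing volumes, whatever tool you choose, is processing data in fixed-size batches rather than loading everything into memory at once. The following is a minimal sketch of that idea. The `batched` and `load_in_batches` helpers and the `load` callback are hypothetical names used for illustration.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List


def batched(rows: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Yield lists of at most batch_size rows without materializing the input."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def load_in_batches(
    rows: Iterable[dict], batch_size: int, load: Callable[[List[dict]], None]
) -> int:
    """Send rows to the load callback one batch at a time; return the row count."""
    total = 0
    for batch in batched(rows, batch_size):
        load(batch)
        total += len(batch)
    return total


loaded_batches: List[List[dict]] = []
source = ({"id": i} for i in range(10))  # a generator stands in for a large source
row_count = load_in_batches(source, 4, loaded_batches.append)
```

Because the source is consumed lazily, memory use depends on the batch size rather than on the total number of rows.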

5. Maintain Data Security

Data security is crucial when integrating data from multiple sources. Implement robust security measures to protect sensitive information and ensure compliance with data privacy regulations.
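One common protective measure is to pseudonymize sensitive fields before they leave the source system. The sketch below uses a keyed hash (HMAC-SHA256) from Python's standard library. The list of sensitive fields and the demo key are assumptions for the example, and a real deployment would load the key from a secrets manager rather than hard-coding it.

```python
import hashlib
import hmac

# Hypothetical set of fields treated as sensitive in this example.
SENSITIVE_FIELDS = {"email", "ssn"}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace a value with a keyed hash so it cannot be read directly."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record: dict, secret_key: bytes) -> dict:
    """Return a copy of the record with sensitive fields pseudonymized."""
    return {
        key: pseudonymize(str(value), secret_key) if key in SENSITIVE_FIELDS else value
        for key, value in record.items()
    }


demo_key = b"demo-key-do-not-use-in-production"
original = {"id": 1, "email": "ada@example.com", "country": "UK"}
masked = mask_record(original, demo_key)
```

The same input and key always produce the same token, so masked records from different sources can still be joined on the pseudonymized field.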

6. Monitor and Optimize

Continuously monitor the data integration process to identify and resolve issues promptly. Optimize performance by fine-tuning integration workflows and addressing bottlenecks.
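A simple way to start monitoring is to time each pipeline step and log the result, which makes bottlenecks easier to spot. Here is a minimal sketch using only the standard library. The step names and the `timed_step` helper are hypothetical, and `time.sleep` stands in for real work.

```python
import logging
import time
from contextlib import contextmanager
from typing import Dict

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("pipeline")

step_durations: Dict[str, float] = {}


@contextmanager
def timed_step(name: str):
    """Record how long a step takes and log failures before re-raising them."""
    start = time.perf_counter()
    try:
        yield
    except Exception:
        logger.exception("step %s failed", name)
        raise
    finally:
        step_durations[name] = time.perf_counter() - start
        logger.info("step %s took %.3fs", name, step_durations[name])


def slowest_step() -> str:
    """Return the name of the step with the longest recorded duration."""
    return max(step_durations, key=step_durations.get)


with timed_step("extract"):
    rows = list(range(1000))

with timed_step("transform"):
    time.sleep(0.05)  # stand-in for an expensive transformation
    rows = [r * 2 for r in rows]

bottleneck = slowest_step()
```

In practice, these durations would typically be sent to a metrics system so that trends and regressions are visible over time.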

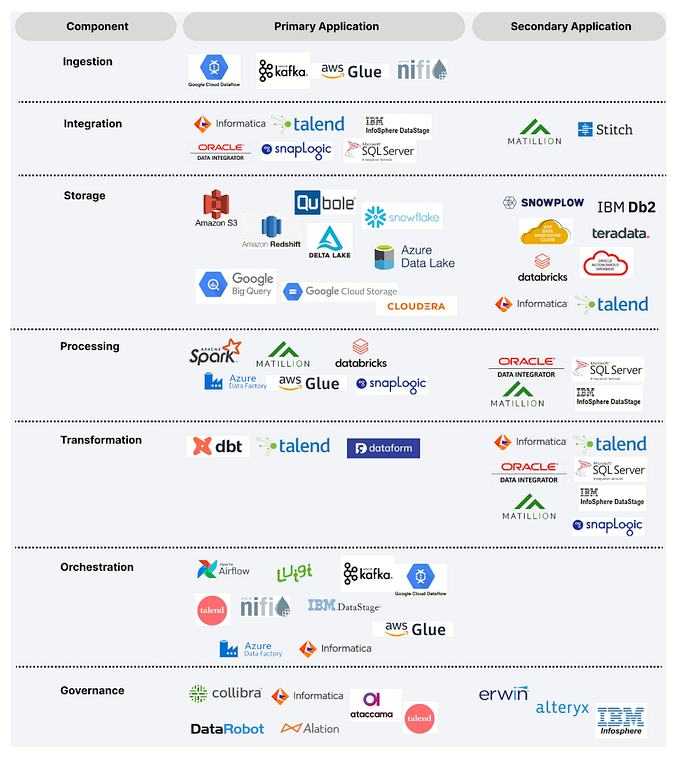

Top Data Integration Tools

1. Apache NiFi

Apache NiFi is an open-source tool designed for data flow automation. It provides a user-friendly interface for designing data pipelines and supports a wide range of data sources and destinations.

Key Features:

- Visual Interface: Drag-and-drop interface for designing data flows.

- Scalability: Supports clustering, so data flows can scale out across multiple nodes as volumes grow.

- Security: Supports encryption, access controls, and data provenance.

2. Talend

Talend is a comprehensive data integration platform that offers tools for ETL, data quality, and data governance. It supports cloud, on-premises, and hybrid environments.

Key Features:

- Versatility: Supports a wide range of data sources and formats.

- Data Quality: Includes tools for data cleansing and validation.

- Cloud Integration: Seamlessly integrates with cloud platforms like AWS, Azure, and Google Cloud.

3. Informatica PowerCenter

Informatica PowerCenter is a powerful data integration tool known for its high performance and reliability. It provides extensive connectivity options and advanced transformation capabilities.

Key Features:

- Performance: Optimized for handling large datasets and complex transformations.

- Connectivity: Connects to a variety of data sources, including databases, applications, and cloud services.

- Data Governance: Offers robust data governance and metadata management features.

4. Microsoft Azure Data Factory

Azure Data Factory is a cloud-based data integration service that allows you to create, schedule, and orchestrate data pipelines. It supports ETL and ELT processes and integrates seamlessly with other Azure services.

Key Features:

- Cloud-Native: Fully managed service with built-in scalability.

- Integration: Integrates with a wide range of data sources and Azure services.

- Automation: Supports scheduling and automation of data workflows.

Conclusion

Data integration is a vital process for organizations looking to maximize the value of their data. By following best practices and choosing the right tools, businesses can improve data quality, make better decisions, and run more efficiently. Whether you’re just starting your data integration journey or looking to optimize existing processes, the strategies and tools highlighted in this post will help you achieve your goals.